AI Agents: The 2026 Insider Threat You Can't Ignore

For years, insider threat programs have focused on people - disgruntled employees, compromised credentials, and simple human error. In 2026, that definition is breaking down fast.

The next major insider threat isn't just human. It's AI agents.

Autonomous AI systems are being embedded directly into enterprise workflows, granted access to sensitive data, and trusted to act on behalf of users. They're accelerating productivity, sure. But they're also quietly expanding the insider risk surface in ways most organizations are nowhere near prepared for.

A New Kind of Insider: Autonomous, Scaled, and Fast

Security leaders are already sounding the alarm. Industry predictions indicate that AI agents are on track to become one of the leading insider-risk drivers in 2026, not because they're malicious, but because they operate with broad permissions, limited oversight, and machine-speed execution.

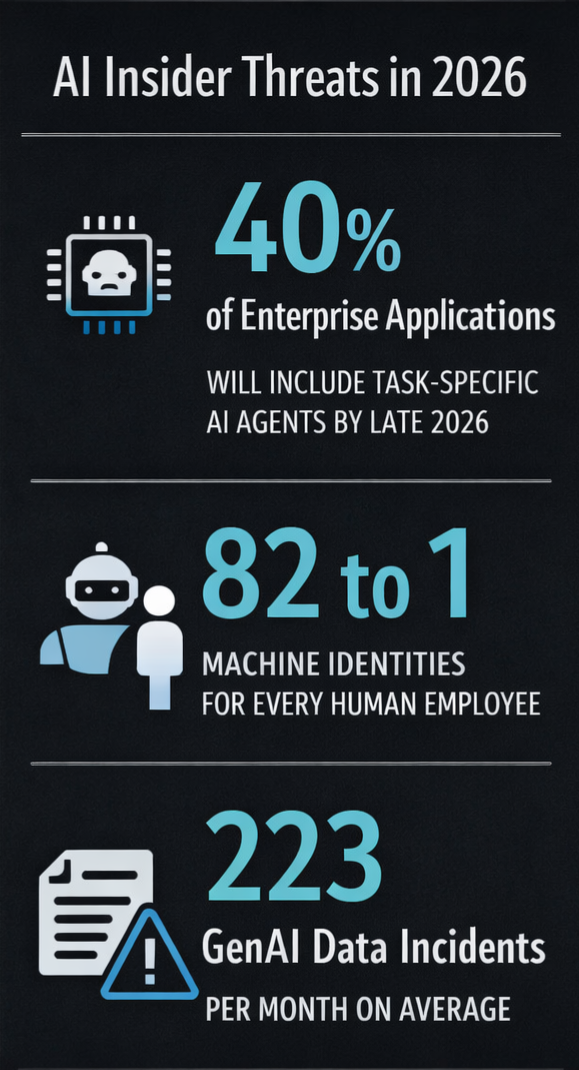

Here's the stat that should wake you up: by the end of 2026, roughly 40% of enterprise applications are expected to include task-specific AI agents. That's an explosive jump from single-digit adoption just a year earlier.

And here's the scale problem most organizations aren't grappling with yet: machine identities, including AI agents, now outnumber human employees by 82 to 1. When non-human identities outnumber your people 82 to 1, traditional identity and access management frameworks built for humans simply can't keep pace.

Unlike traditional software, these agents act across multiple systems, inherit user or service-level privileges, and execute chains of actions autonomously. They can be manipulated through inputs, prompts, or misconfiguration. When something goes wrong, the impact scales instantly.

The 2026 Numbers You Need to Know

AI risk isn't theoretical. The data already shows a sharp rise in AI-driven exposure tied directly to insider behavior, both intentional and accidental.

Organizations now average 223 GenAI-related data policy violations per month, more than doubling year over year. Among the top 25% of organizations, that number jumps to 2,100 incidents per month. Nearly half (47%) of enterprise AI usage occurs through unsanctioned or "Shadow AI" tools, completely outside IT and security visibility. Over 60% of insider incidents are now linked to personal cloud apps and unsanctioned services, many of which include AI features.

GenAI traffic surged by more than 890% in the past year, reflecting how quickly these tools have moved from experimental to essential. And here's the protection gap: only 50% of organizations have deployed data loss prevention tools to keep sensitive data from leaking via GenAI apps.

This isn't a future problem. AI isn't just creating new external attack vectors. It's amplifying insider risk right now through speed, scale, and invisibility. Looking ahead, Gartner predicts that by 2028, 25% of enterprise breaches will trace back to AI agent abuse.

Why AI Agents Break the Traditional Model

Traditional insider threat frameworks were built around a human actor with clear intent - malicious or negligent - whose actions unfold over time and can be observed. AI agents shatter those assumptions.

They don't have intent, but they do have persistent access, the ability to chain actions together, dependence on inputs that can be manipulated, and the power to move and transform data faster than humans ever could.

A single compromised agent, mis-prompted workflow, or poorly governed AI integration can behave like a high-privilege insider operating at machine speed. Meanwhile, human employees are increasingly interacting with AI tools in ways that blur accountability: pasting sensitive data into AI prompts, using personal AI accounts for work, and relying on copilots to summarize or generate content without understanding where the data goes.

What we're seeing is a hybrid insider threat landscape - part human, part machine - where visibility gaps create serious risk. And most security programs aren't built for it.

Why Blocking AI Isn't the Answer

Some organizations respond by trying to restrict or block AI usage outright. In practice, this rarely works. Employees adopt AI because it genuinely increases productivity. When official pathways are too restrictive, usage simply moves into the shadows, making risk harder to detect, not easier to control.

The smarter approach is visibility with context: who is using AI tools, how frequently, with what types of data, in what business context, and how that behavior changes over time.
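To make "visibility with context" concrete, here is a minimal Python sketch of what a contextual usage record and two simple views over it might look like. The `AIUsageEvent` fields, the data-type labels, and the helper names are all hypothetical choices for illustration, not a description of any particular product's schema.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AIUsageEvent:
    """One observed use of an AI tool (hypothetical schema)."""
    user: str       # who used the tool
    tool: str       # which AI tool was used
    data_type: str  # assumed labels: "public", "internal", "confidential"
    context: str    # business context, e.g. "support" or "finance"
    day: date       # when it happened


def weekly_counts(events, user):
    """Count one user's AI events per ISO (year, week) to show change over time."""
    counts = Counter()
    for e in events:
        if e.user == user:
            iso = e.day.isocalendar()
            counts[(iso[0], iso[1])] += 1
    return dict(counts)


def data_type_mix(events, user):
    """Count one user's AI events per data type."""
    return dict(Counter(e.data_type for e in events if e.user == user))
```

Even a record this small answers the questions above: the `user` and `tool` fields cover who and what, `weekly_counts` covers frequency and trend, and `data_type_mix` together with `context` covers the kind of data and the business setting.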

How InnerActiv Approaches AI-Driven Insider Risk

As insider risk expands beyond people to include AI agents, copilots, and automated workflows, security teams need more than simple detection. They need understanding.

The answer isn't blocking all AI traffic or opening the floodgates to unrestricted AI usage. Both extremes create problems: blocking drives usage underground into shadow AI, while unrestricted access exposes sensitive data at scale. What organizations need is visibility into AI usage and access, with guardrails around both.

InnerActiv treats AI-driven insider risk as a behavioral and data-centric problem, not a checkbox exercise. We analyze user behavior and activity patterns to surface risk, including anomalies tied to AI-assisted workflows. This makes it possible to identify when AI usage shifts from normal productivity into potential exposure. Security teams gain insight into how AI tools are used across roles and groups, helping distinguish legitimate business use from misuse, over-sharing, or policy drift.

We track how employees access, interact with, and duplicate data, even when AI tools are involved. This provides visibility into sensitive data flowing into AI systems, being transformed by them, or resurfacing elsewhere. By correlating AI usage with user behavior, data sensitivity, and historical activity, InnerActiv enables proportionate responses - reducing false positives while catching real risk earlier.
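As a rough illustration of the general idea of correlating activity with data sensitivity and historical baselines, the Python sketch below scores a day's AI usage by how far it sits above a user's own history, weighted by the sensitivity of the data involved. This is not InnerActiv's algorithm; the weights, the `MIN_SIGMA` floor, and the function names are assumptions made up for this example.

```python
from statistics import mean, pstdev

# Assumed weights: how much each data type should amplify an anomaly.
SENSITIVITY_WEIGHT = {"public": 0.0, "internal": 1.0, "confidential": 3.0}

# Assumed floor on the spread, so a perfectly flat history
# does not cause a division by zero.
MIN_SIGMA = 1.0


def deviation(history, today):
    """Return how many standard deviations `today` is above the historical mean.

    Values at or below the mean, or with fewer than two history points,
    score 0.0.
    """
    if len(history) < 2:
        return 0.0
    sigma = max(pstdev(history), MIN_SIGMA)
    return max(0.0, (today - mean(history)) / sigma)


def risk_score(history, today, data_type):
    """Weight the behavioral deviation by the sensitivity of the data involved."""
    weight = SENSITIVITY_WEIGHT.get(data_type, 1.0)  # unknown types get a neutral weight
    return deviation(history, today) * weight
```

Because the score multiplies the two signals, a spike in usage involving only public data scores zero, while the same spike involving confidential data rises quickly. That is one simple way a proportionate response could be expressed, and it shows why combining signals tends to reduce false positives compared with alerting on volume alone.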

In a world where AI agents increasingly behave like digital insiders, visibility without context isn't enough.

The Reality: Insider Threat Is No Longer Just Human

In 2026, insider risk is no longer a single category. It's a spectrum that includes employees, AI assistants, autonomous agents, and the interactions between them.

Organizations that treat AI as just another tool will struggle to keep up. Those that understand how AI changes behavior, data flow, and risk will be positioned to innovate securely.

The future of insider risk isn't about stopping AI. It's about understanding it and securing it with context.
